From Reactive to Active: The Always-On AI Development Revolution

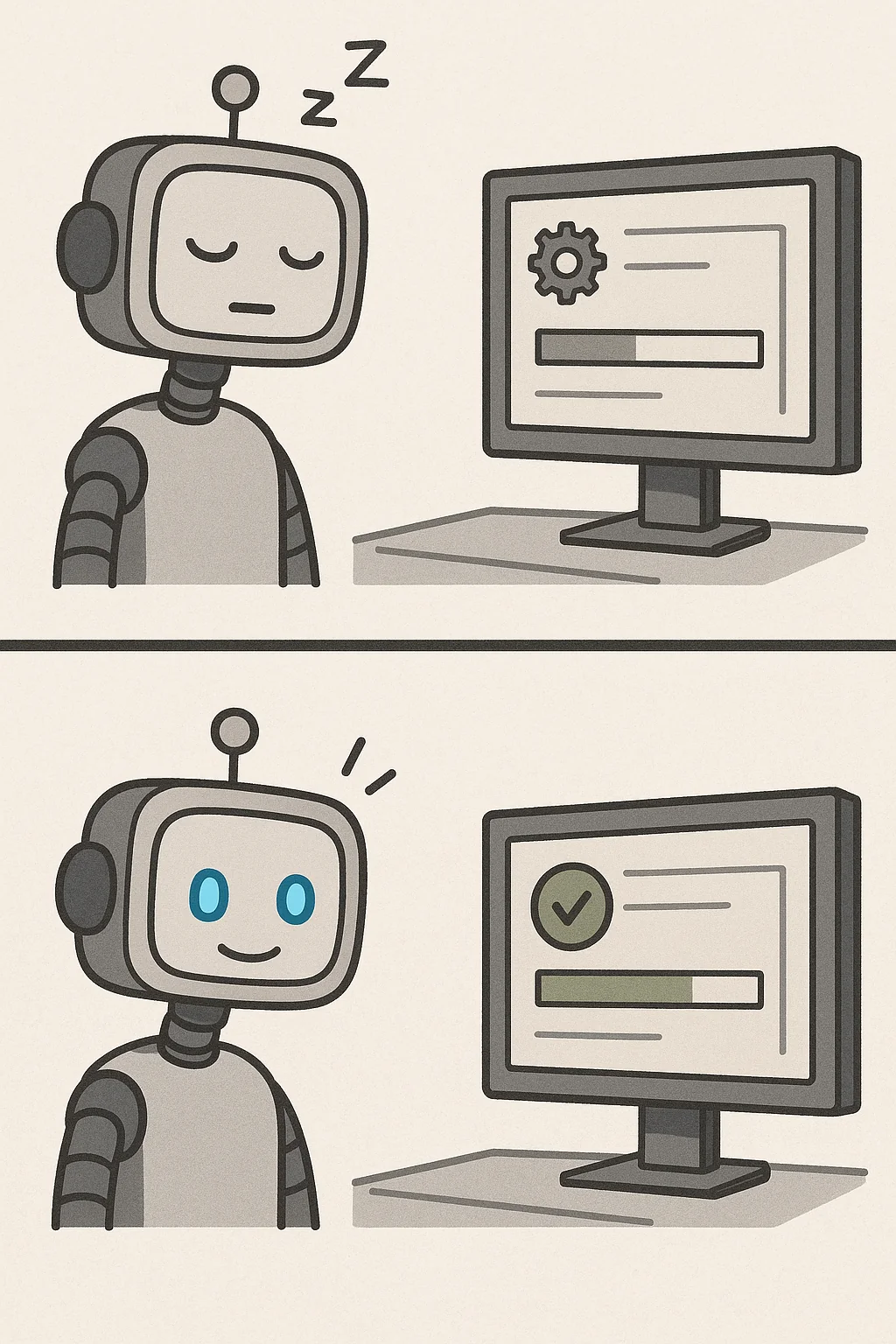

We noticed something remarkable while working with Claude in our terminal. Large language models are fundamentally passive: they respond to a prompt, return an answer, and then sit idle until the next human input. That request-and-response pattern has defined most AI interactions to date.

But there's an emergent capability that changes this. An AI agent with shell access can run the sleep command and so schedule its own next step. This turns it from a passive responder into an active participant that can monitor, wait, and return with updates on its own.

The Observation That Changes Everything

While experimenting with Claude Code in our development workflow, we saw this firsthand: the agent can put itself to sleep and resume on a schedule it chooses. This seemingly simple capability shifts how we think about AI, from passive tool toward active infrastructure.

Here's what we observed in practice. We were starting a PostgreSQL container that takes a few minutes to fully initialize:

# Starting a PostgreSQL container
docker run -d --name postgres-dev \
  -e POSTGRES_PASSWORD=devpass \
  -e POSTGRES_DB=testdb \
  -p 5432:5432 \
  postgres:15

Instead of manually checking if it was ready, Claude did this:

# Claude's autonomous monitoring approach
echo "Starting PostgreSQL container monitoring..."
docker logs postgres-dev --tail=10

# Claude then says: "I can see the container is starting up but not ready yet.
# The logs show 'database system is starting up'. I'll wait 30 seconds and check again."
sleep 30 && echo "Checking PostgreSQL startup progress..." && docker logs postgres-dev --tail=5

# Claude continues: "Still initializing. I can see 'PostgreSQL init process complete'
# but waiting for 'database system is ready to accept connections'.
# I'll check again in 45 seconds."
# PostgreSQL writes its log to stderr, so merge stderr into stdout before grepping.
sleep 45 && echo "Final PostgreSQL readiness check..." && \
  docker logs postgres-dev 2>&1 | grep "ready to accept connections" && \
  echo "✅ PostgreSQL is now ready for connections!"

The AI agent schedules itself to check back, analyzes the logs autonomously, and only surfaces when the container is actually ready. No human prompting required between checks.
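The fixed 30- and 45-second waits above can be generalized into a polling loop with a deadline. The following is a minimal sketch under our own assumptions, not a record of what Claude ran: `wait_until` is a hypothetical helper name, and the timeout and interval in the usage comment are arbitrary. The usage line relies on `pg_isready`, which ships with the official `postgres` image.

```shell
# wait_until TIMEOUT_SECONDS INTERVAL_SECONDS COMMAND [ARGS...]
# Re-runs COMMAND until it exits 0 or TIMEOUT_SECONDS have elapsed.
# Returns 0 on success and 1 on timeout. (Hypothetical helper, for illustration.)
wait_until() {
  local timeout="$1" interval="$2" deadline
  shift 2
  deadline=$(( $(date +%s) + timeout ))
  until "$@"; do
    if [ "$(date +%s)" -ge "$deadline" ]; then
      echo "Timed out after ${timeout}s waiting for: $*" >&2
      return 1
    fi
    sleep "$interval"
  done
}

# Example usage (assumes the postgres-dev container from above is running):
#   wait_until 120 5 docker exec postgres-dev pg_isready -U postgres \
#     && echo "PostgreSQL is ready for connections"
```

Checking the exit status of a readiness probe is usually more robust than grepping logs, because it does not depend on the exact wording of a log message.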

What This Means in Practice

This simple observation unlocks profound possibilities. The traditional cycle looks like this:

- Start a process

- Manually check if it's done

- Wait and check again

- Repeat until complete

We can now delegate the entire monitoring cycle to Claude:

# Starting a Node.js application that takes time to initialize
# (assumes the app's package.json is in the current directory, mounted at /app)
docker run -d --name node-app -p 3000:3000 \
  -v "$PWD":/app -w /app node:18 \
  sh -c "npm install && npm start"

# Claude autonomously monitors the startup
echo "Monitoring Node.js application startup..."
docker logs node-app --tail=5

# Claude reports: "I can see 'npm install' is running. The logs show package installation in progress.
# I'll wait 60 seconds for dependencies to install, then check again."
sleep 60 && echo "Checking npm install progress..." && docker logs node-app --tail=10

# Claude continues: "Dependencies installed. Now I see 'npm start' executing.
# Waiting for 'Server listening on port 3000'. I'll check again in 30 seconds."
sleep 30 && echo "Checking server startup..." && \
  docker logs node-app 2>&1 | grep "listening on port" && \
  echo "✅ Node.js server is now running and accepting connections!"

The AI agent manages its own activity cycle, only surfacing when the application is actually ready.
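In the session above, Claude chose each wait (60 seconds, then 30) by reading the logs. A script cannot make that judgment, so the sketch below substitutes capped exponential backoff, which is our own stand-in and not what the agent did. The function names `log_contains`, `next_delay`, and `wait_for_log` are hypothetical, and the numbers in the usage comment are arbitrary.

```shell
# log_contains CONTAINER PATTERN
# True if the container's combined stdout/stderr log contains PATTERN.
log_contains() {
  docker logs "$1" 2>&1 | grep -q -- "$2"
}

# next_delay CURRENT MAX
# Prints CURRENT doubled, capped at MAX.
next_delay() {
  local doubled=$(( $1 * 2 ))
  if [ "$doubled" -gt "$2" ]; then echo "$2"; else echo "$doubled"; fi
}

# wait_for_log CONTAINER PATTERN MAX_ATTEMPTS INITIAL_DELAY MAX_DELAY
# Checks the log up to MAX_ATTEMPTS times, doubling the wait between checks.
wait_for_log() {
  local container="$1" pattern="$2" attempts="$3" delay="$4" max_delay="$5"
  local i=1
  while [ "$i" -le "$attempts" ]; do
    if log_contains "$container" "$pattern"; then
      echo "Matched '$pattern' on attempt $i"
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
      delay=$(next_delay "$delay" "$max_delay")
    fi
    i=$(( i + 1 ))
  done
  echo "No match for '$pattern' after $attempts attempts" >&2
  return 1
}

# Example usage (assumes the node-app container from above):
#   wait_for_log node-app "listening on port" 6 5 60
```

Backoff keeps early checks frequent while avoiding a tight loop if startup turns out to be slow.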

The Infrastructure Transformation

This observation reveals something profound: AI can transform from a tool we use into infrastructure that runs alongside our systems. The implications are far-reaching:

From Sessions to Services

Traditional AI interactions are session-based: we start a conversation, get help, and the session ends. What we've observed points toward AI operating as a service instead, one that keeps running and monitoring and surfaces only when needed.

Time-Aware Intelligence

By combining sleep commands with timestamp analysis, AI agents become genuinely time-aware. We've seen Claude:

- Parse log timestamps to understand event sequences

- Calculate time deltas between operations

- Detect patterns in system behavior over time

- Schedule its own activity based on expected system rhythms

# Claude analyzing our application logs over time
# (assumes each log line starts with a date field and a time field)
echo "Counting errors per timestamp..." && \
grep "ERROR" /var/log/application.log | \
  awk '{print $1, $2}' | \
  sort | uniq -c | \
  while read -r count timestamp; do
    echo "Found $count errors at $timestamp"
  done && \
echo "Checking again in an hour for new patterns..." && \
sleep 3600
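The "calculate time deltas between operations" step can be made concrete with a small helper. This is a sketch under stated assumptions: `seconds_between` is a hypothetical name, the timestamps are made-up examples, and the `-d` flag assumes GNU `date` (BSD and macOS `date` take different flags).

```shell
# seconds_between START END
# Prints the number of seconds from START to END, both read as UTC.
# Assumes GNU date; the -d flag parses a timestamp string.
seconds_between() {
  local start end
  start=$(date -u -d "$1" +%s) || return 1
  end=$(date -u -d "$2" +%s) || return 1
  echo $(( end - start ))
}

# Example: the gap between two log entries.
#   seconds_between "2024-05-01 12:00:00" "2024-05-01 12:01:30"   # prints 90
```

An agent could compare a delta like this against an expected startup time to decide how long to sleep before its next check.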

Self-Managing Compute

Current AI implementations impose artificial limits (like 20-call constraints), but we expect these to be temporary. Without those limits, AI agents could manage their own compute resources: sleeping when idle, waking when needed, and tuning their activity patterns based on what they observe.

The Always-On Vision

What we're seeing points toward AI infrastructure that's always running in the cloud. Instead of spinning up AI agents when we need them, they become persistent services that:

- Monitor our systems continuously without our oversight

- Scale their activity based on system load and events

- Coordinate with each other across different monitoring domains

- Surface insights proactively rather than reactively

We can envision our development infrastructure with AI agents like:

# Multiple autonomous agents running simultaneously in our cloud environment
Agent-1: Monitoring our production deployments
Agent-2: Watching our database performance metrics
Agent-3: Tracking our CI/CD pipeline health
Agent-4: Analyzing user behavior patterns on our applications
Agent-5: Managing our infrastructure scaling decisions

Each agent would operate independently, manage its own sleep/wake cycles, and only require our attention when intervention is needed.
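As a rough illustration of independent sleep/wake cycles, the sketch below runs several check loops as background shell jobs. It is a toy stand-in for real agents: `run_monitor` is a hypothetical helper, the health URL is a placeholder, and the intervals and check counts are arbitrary.

```shell
# run_monitor NAME INTERVAL_SECONDS CHECKS COMMAND [ARGS...]
# Runs COMMAND CHECKS times, sleeping INTERVAL_SECONDS between runs, and
# prints one status line per check, prefixed with the monitor's name.
run_monitor() {
  local name="$1" interval="$2" checks="$3"
  shift 3
  local i=1
  while [ "$i" -le "$checks" ]; do
    if "$@" >/dev/null 2>&1; then
      echo "[$name] check $i: ok"
    else
      echo "[$name] check $i: NEEDS ATTENTION"
    fi
    if [ "$i" -lt "$checks" ]; then
      sleep "$interval"
    fi
    i=$(( i + 1 ))
  done
}

# Example usage: two monitors on their own schedules (placeholder URL).
#   run_monitor deployments 60 10 curl -fsS https://example.com/health &
#   run_monitor database 30 20 docker exec postgres-dev pg_isready -U postgres &
#   wait   # block until both monitors have finished
```

Each loop keeps its own cadence, and only the "NEEDS ATTENTION" lines call for a human to look.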

Why This Changes Everything

This isn't just about automation—it's about cognitive load reduction. Instead of context-switching between development work and system monitoring, we can delegate the watching to AI agents that maintain context over time.

The shift from reactive to active AI means:

- Reduced mental overhead: We don't need to remember to check things

- Faster incident response: AI agents detect issues at their next scheduled check, not whenever we remember to look

- Better system understanding: Continuous monitoring reveals patterns we miss

- Scalable oversight: We can oversee many autonomous agents simultaneously

The Technical Foundation

This transformation is possible because of several converging capabilities:

- Terminal-native AI tools like Claude Code that can execute system commands

- Sleep command support that enables self-scheduling

- Timestamp awareness through log analysis and system integration

- Persistent context that maintains monitoring state across sleep cycles

- Cloud AI infrastructure that can run agents continuously
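The "persistent context" item in the list above is the least obvious of these, so here is one way it could look at the script level. This is a sketch under our own assumptions, not how Claude Code keeps context: `new_lines_since_last_check` is a hypothetical helper that stores a line count in a state file so each wake-up only examines log lines added since the previous one.

```shell
# new_lines_since_last_check LOGFILE STATEFILE
# Prints only the lines appended to LOGFILE since the previous call, using
# STATEFILE to remember how many lines had already been seen.
new_lines_since_last_check() {
  local logfile="$1" statefile="$2" seen="" total
  if [ -f "$statefile" ]; then
    seen=$(cat "$statefile")
  fi
  seen=${seen:-0}
  total=$(wc -l < "$logfile")
  total=$(( total + 0 ))   # normalize whitespace padding some wc versions add
  # If the log shrank (for example after rotation), start again from the top.
  if [ "$total" -lt "$seen" ]; then
    seen=0
  fi
  # Print lines seen+1 through total, then record the new position.
  awk -v from="$seen" -v to="$total" 'NR > from && NR <= to' "$logfile"
  echo "$total" > "$statefile"
}

# Example usage between sleeps (paths are placeholders):
#   new_lines_since_last_check /var/log/application.log /tmp/app.offset | grep ERROR
#   sleep 3600
```

Keeping the position in a file means the monitoring state survives even if the process doing the checking is restarted between cycles.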

What's Coming Next

We believe we're at the beginning of this transformation. Current implementations have artificial limits, but the core capability exists today. As these constraints are removed, we expect to see:

- AI agent orchestration platforms for managing multiple autonomous agents

- Cross-system monitoring where agents coordinate across different infrastructure layers

- Predictive intervention where agents don't just monitor but anticipate issues

- Self-optimizing systems where AI agents tune their own monitoring patterns

The reactive AI era is ending. The always-on, active AI infrastructure era is beginning.

This emergent capability represents one of the most significant developments in AI tooling since conversational interfaces. The ability for AI agents to schedule themselves transforms them from tools we use into infrastructure that works alongside us.